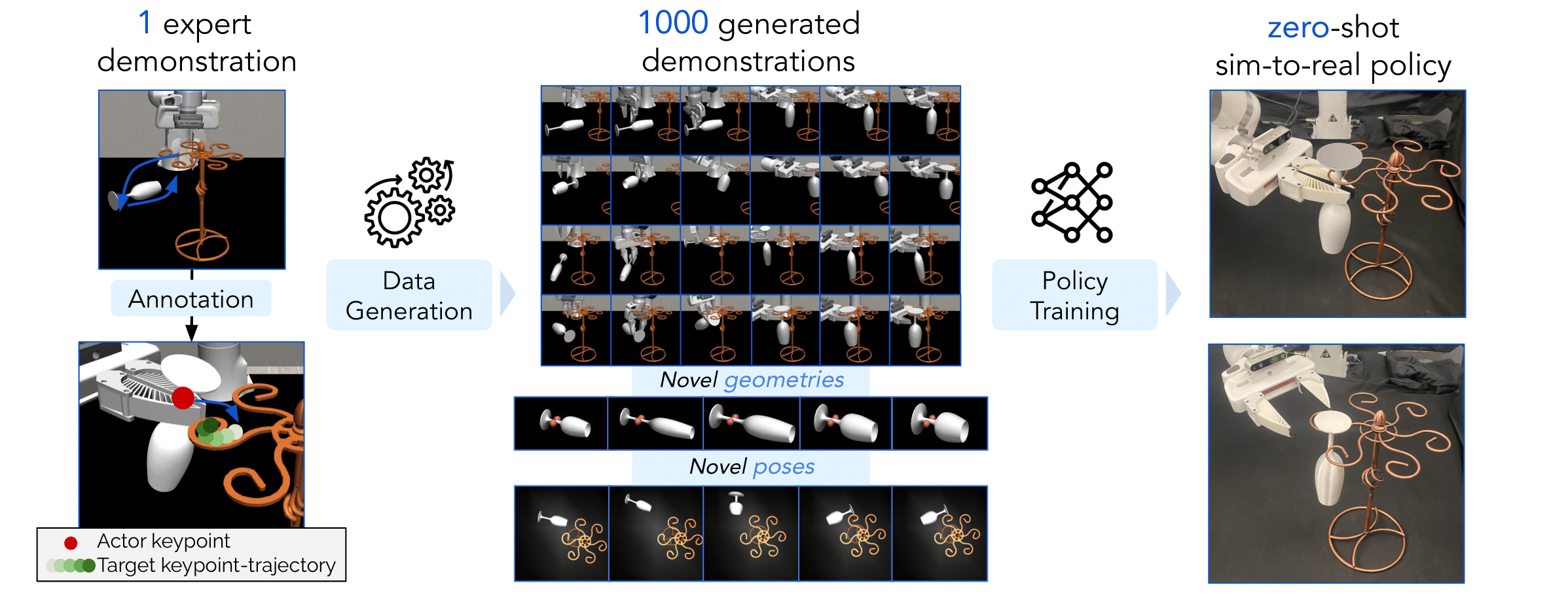

CP-Gen generates data for new object poses, scales, and geometries from a single demonstration, and enables zero-shot sim2real transfer.

Abstract

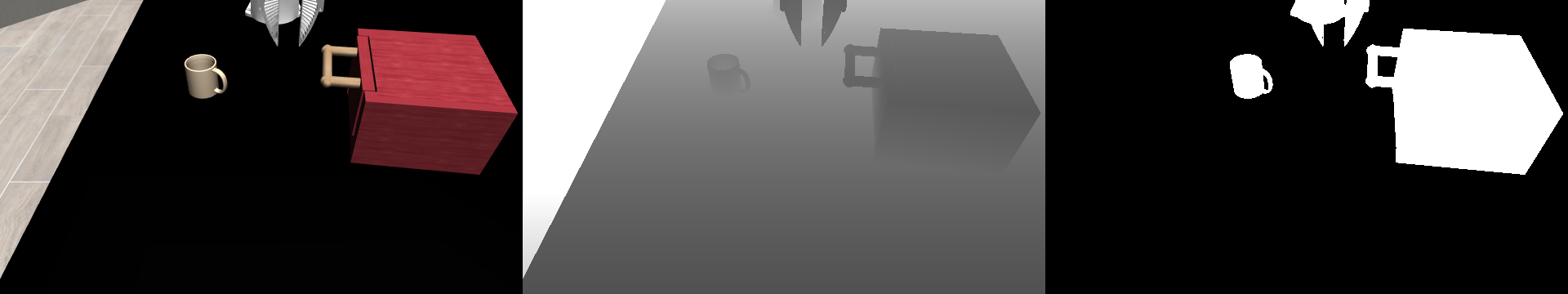

Large-scale demonstration data has powered key breakthroughs in robot manipulation, but collecting that data remains costly and time-consuming. To reduce this cost, we present Constraint-Preserving Data Generation (CP-Gen), a method that uses a single expert trajectory to generate robot demonstrations containing novel object geometries and poses. These generated demonstrations are used to train closed-loop visuomotor policies that transfer zero-shot to the real world. Similar to prior data-generation work focused on pose variations, CP-Gen first decomposes expert demonstrations into free-space motions and robot skills. Unlike prior work, we achieve geometry-aware data generation by formulating robot skills as keypoint-trajectory constraints: keypoints on the robot or grasped object must track a reference trajectory defined relative to a task-relevant object. To generate a new demonstration, CP-Gen samples pose and geometry transforms for each task-relevant object, then applies these transforms to the object and its associated keypoints or keypoint trajectories. We optimize robot joint configurations so that the keypoints on the robot or grasped object track the transformed keypoint trajectory, and then plan a collision-free motion to the first optimized joint configuration. Using demonstrations generated by CP-Gen, we train visuomotor policies that generalize across variations in object geometries and poses. Experiments on 16 simulation tasks and four real-world tasks, featuring multi-stage, non-prehensile, and tight-tolerance manipulation, show that policies trained using our method achieve an average success rate of 77%, outperforming the best baseline, which achieves an average success rate of 50%.
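The transform step described in the abstract can be illustrated with a small sketch. This is not the CP-Gen implementation: it assumes the geometry transform is a per-axis scaling in the object frame, the pose transform is a yaw rotation plus a planar translation, and the sampling ranges are arbitrary. The function names (`make_pose`, `sample_transforms`, `transform_keypoint_trajectory`) are hypothetical, and the later joint-configuration optimization and motion-planning steps are omitted.

```python
import numpy as np


def make_pose(yaw, translation):
    """Build a 4x4 homogeneous transform: rotation about z by `yaw`, then translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def sample_transforms(rng):
    """Sample a geometry transform (scale) and a pose transform for one object.

    The ranges below are illustrative assumptions, not values from the paper.
    """
    scale = rng.uniform(0.8, 1.2, size=3)
    yaw = rng.uniform(-np.pi, np.pi)
    translation = np.append(rng.uniform(-0.1, 0.1, size=2), 0.0)
    return scale, make_pose(yaw, translation)


def transform_keypoint_trajectory(traj_obj, scale, T_world_obj):
    """Map a keypoint trajectory from the object frame into the world frame.

    traj_obj:    (T, K, 3) keypoint positions relative to the task-relevant object.
    scale:       (3,) per-axis scaling applied in the object frame (assumed geometry transform).
    T_world_obj: (4, 4) sampled object pose in the world frame.
    """
    scaled = traj_obj * scale                      # geometry transform
    R, t = T_world_obj[:3, :3], T_world_obj[:3, 3]
    return scaled @ R.T + t                        # pose transform


rng = np.random.default_rng(0)

# Toy reference trajectory: 5 timesteps, 2 keypoints descending toward the object.
demo_traj = np.linspace(
    [[0.0, 0.0, 0.2], [0.05, 0.0, 0.2]],
    [[0.0, 0.0, 0.0], [0.05, 0.0, 0.0]],
    num=5,
)

scale, T_world_obj = sample_transforms(rng)
new_traj = transform_keypoint_trajectory(demo_traj, scale, T_world_obj)
print(new_traj.shape)
```

In a full pipeline, `new_traj` would serve as the target that the keypoints on the robot or grasped object must track when solving for joint configurations.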

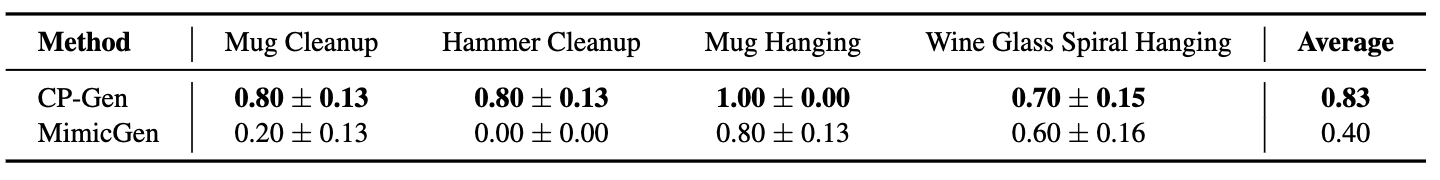

Real World Evaluations

Quantitative Results

CP-Gen for Geometry and Spatial Generalization

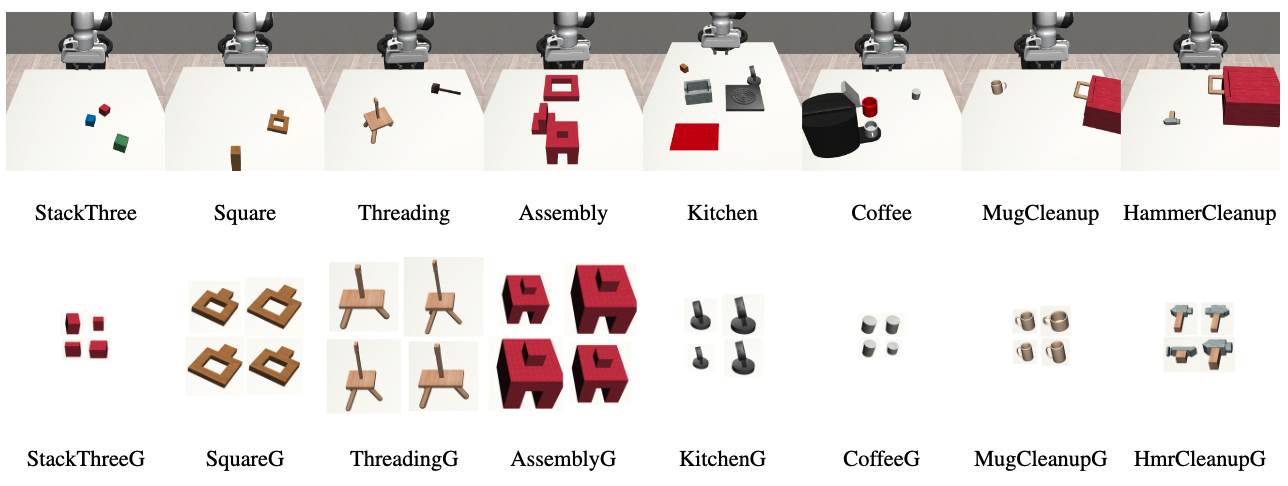

Simulation Environments

Geometry Generalization Reset Distribution

Tasks: Coffee, Kitchen, HammerCleanup, MugCleanup, ThreePieceAssembly, Square, StackThree, Threading

CP-Gen Generated Trajectories

Tasks: Coffee, Kitchen, HammerCleanup, MugCleanup, ThreePieceAssembly, Square, StackThree, Threading

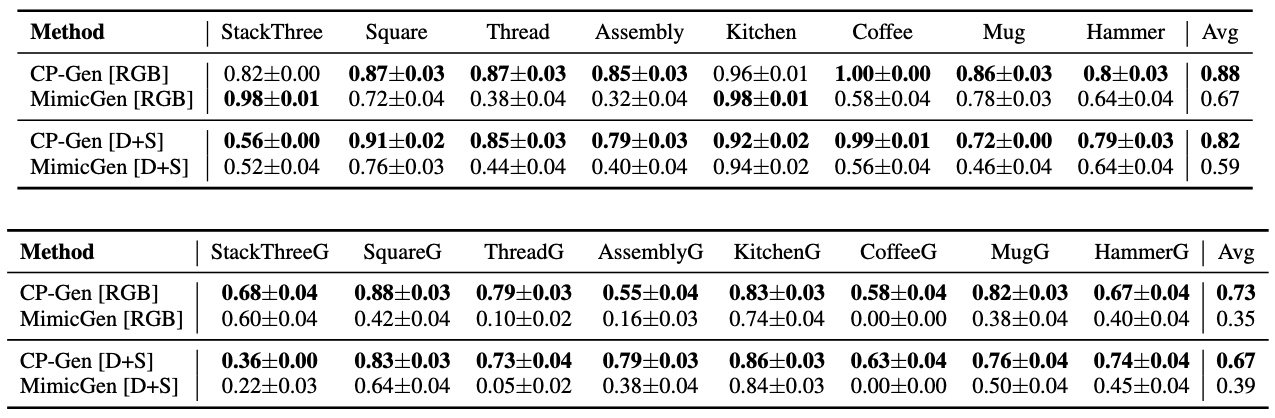

CP-Gen achieves state-of-the-art results on the MimicGen simulation benchmark. On the original MimicGen benchmark (default task variants), CP-Gen achieves an average success rate of 88% compared to MimicGen's 67%. On our custom benchmark of Geometry Generalization task variants, which feature novel object geometries (denoted as TaskG), CP-Gen achieves an average success rate of 70%, outperforming MimicGen's 37% by 33 percentage points. These results highlight CP-Gen's strong generalization not only to pose variations but also to challenging geometric variations. Bolded numbers indicate the best-performing method within each modality group.
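The gaps between the success rates quoted above are absolute differences in success rate, that is, percentage points, which is not the same as a relative improvement. A minimal sketch of the arithmetic, using only the averages reported in this section (the helper names are hypothetical):

```python
def percentage_point_gap(ours, baseline):
    """Absolute difference between two success rates given in percent."""
    return ours - baseline


def relative_improvement(ours, baseline):
    """Improvement as a fraction of the baseline success rate."""
    return (ours - baseline) / baseline


# Average success rates (%) quoted in the text: (CP-Gen, MimicGen).
results = {
    "MimicGen benchmark (default variants)": (88, 67),
    "Geometry Generalization variants": (70, 37),
}

for name, (cp_gen, mimicgen) in results.items():
    gap = percentage_point_gap(cp_gen, mimicgen)
    rel = relative_improvement(cp_gen, mimicgen)
    print(f"{name}: +{gap} percentage points, {rel:.0%} relative improvement")
```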

Citation

@inproceedings{lin2025cpgen,
  title={Constraint-Preserving Data Generation for Visuomotor Policy Generalization},
  author={Kevin Lin and Varun Ragunath and Andrew McAlinden and Aaditya Prasad and Jimmy Wu and Yuke Zhu and Jeannette Bohg},
  booktitle={9th Annual Conference on Robot Learning},
  year={2025},
  url={https://openreview.net/forum?id=KSKzA1mwKs}
}

Acknowledgments

Toyota Research Institute provided funds to support this work. Additionally, this work was partially supported by the National Science Foundation (FRR-2145283, EFRI-2318065), the Office of Naval Research (N00014-24-1-2550), the DARPA TIAMAT program (HR0011-24-9-0428), and the Army Research Lab (W911NF-25-1-0065). It was also supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korean Government (MSIT) (No. RS-2024-00457882, National AI Research Lab Project). We additionally thank Timothy Chen, Wil Thomason, Zak Kingston, Tyler Lum, Priya Sundaresan, Fanyun Sun, Yifeng Zhu, Zhenyu Jiang, Mingyo Seo, Megan Hu, William Chong, Marion Lepert, and Brent Yi for helpful discussions throughout the project.